Cognitive Assessment

Behaverse comprises a large battery of cognitive tests covering a wide range of domains, including attentional, perceptual, cognitive and motor abilities. The battery consists of multiple Cognitive Assessment Engines, which are highly customizable (including their instructions, tutorials and practice blocks) and can instantiate many classic cognitive tests. The Behaverse Cognitive Assessment battery is GDPR-compliant, multilingual and multiplatform, and it supports the collection of fine-grained behavioral data while remaining robust to interruptions in network connectivity.

Below we showcase Cognitive Assessment Engines with examples of how they can be used to instantiate classic tests in cognitive psychology.

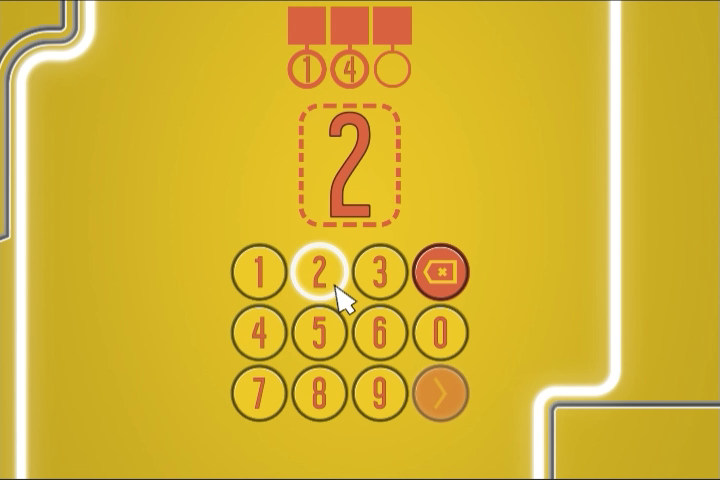

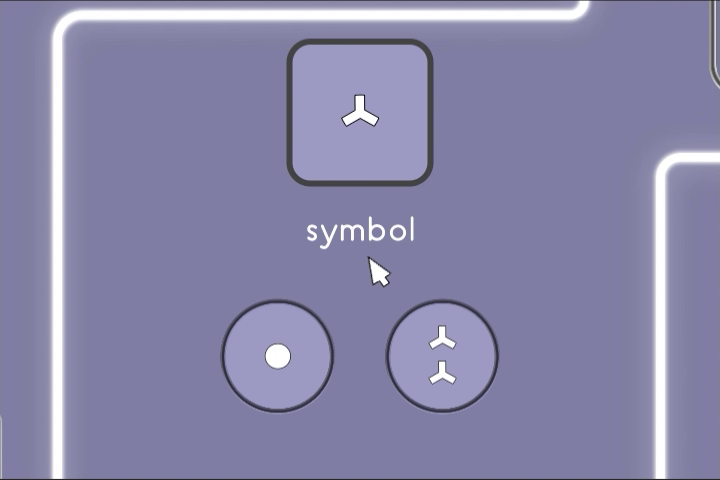

Symbol Span

The symbol span test measures participants’ short-term memory. After seeing a sequence of symbols, participants are asked to recall the sequence in order. The length of the sequence is adjusted to each participant’s ability.

This test engine can be parameterized to instantiate both forward and backward span tests using digits, letters or abstract symbols, among other stimuli.
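As a rough illustration of how a span length can adapt to performance, the sketch below assumes a simple one-up/one-down staircase: the next sequence is one item longer after a fully correct recall and one item shorter after an error. The class name, the default bounds and the update rule are hypothetical choices for illustration, not the Behaverse engine's actual adaptation procedure.

```python
from dataclasses import dataclass


@dataclass
class SpanStaircase:
    """Hypothetical one-up/one-down staircase for a span task (illustrative only)."""

    length: int = 3          # current sequence length
    min_length: int = 2      # assumed lower bound
    max_length: int = 9      # assumed upper bound
    backward: bool = False   # True for a backward span (recall in reverse order)

    def expected(self, presented: list[str]) -> list[str]:
        # In a backward span, the correct answer is the presented sequence reversed.
        return presented[::-1] if self.backward else list(presented)

    def score(self, presented: list[str], recalled: list[str]) -> bool:
        # A trial is correct only if every item is recalled in the correct position.
        correct = recalled == self.expected(presented)
        # Lengthen after a correct trial, shorten after an error, within the bounds.
        if correct:
            self.length = min(self.length + 1, self.max_length)
        else:
            self.length = max(self.length - 1, self.min_length)
        return correct


staircase = SpanStaircase(length=3, backward=True)
print(staircase.score(["7", "2", "9"], ["9", "2", "7"]), staircase.length)  # True 4
```

A one-up/one-down rule tends to settle around the length at which recall succeeds about half of the time; stricter rules, such as requiring two consecutive correct trials before lengthening, target a higher accuracy level.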

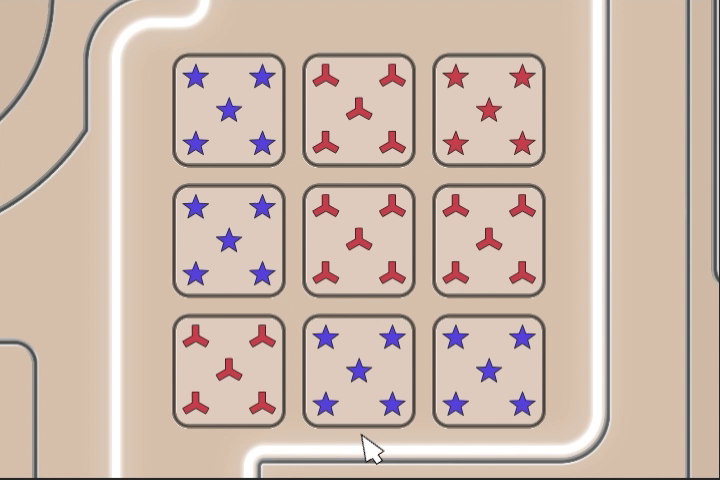

Deductive Reasoning

This is a non-verbal deductive reasoning test. Participants are asked to identify which of the nine patterns shown on the screen is unrelated to the other eight. The difficulty of the problems increases as participants progress through the test.

Matrix Reasoning

This is a non-verbal fluid intelligence test based on the well-known Raven’s Progressive Matrices. A matrix of nine patterns is shown, with one piece missing. Participants are asked to infer the rules that govern the patterns and to select the missing piece from a set of options. The test is procedurally generated and can produce a large number of problems of different kinds.

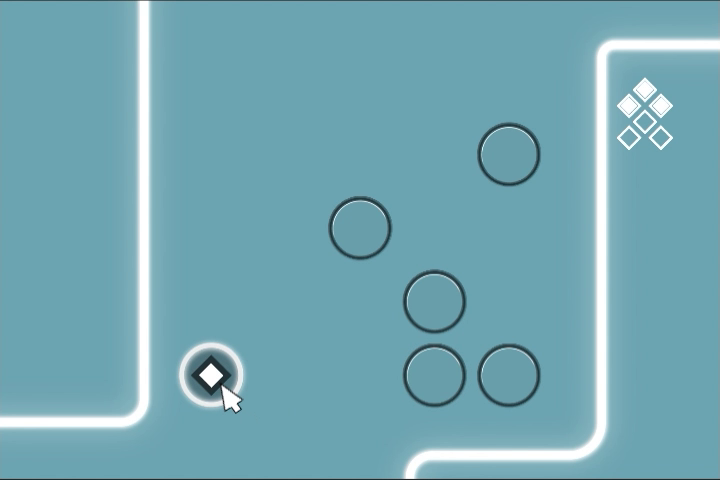

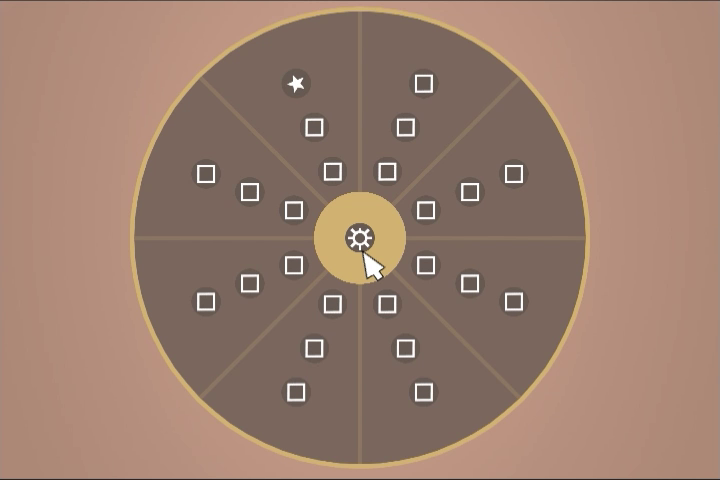

Self-Ordered Search

The self-ordered search task is a visuo-spatial working memory test. Over successive searches, a token is hidden behind each of the boxes displayed on the screen, one box at a time. Participants click on the boxes to reveal their content and find the token. Once the token is found, it is hidden again inside one of the boxes that has not yet contained it. Participants succeed when they find all the tokens without reopening a box that they already know is empty or that has already contained a token. This test is procedurally generated and adapts to participants’ performance.

This engine can instantiate different versions of this test.
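To make the success criterion concrete, the following sketch counts two kinds of errors that are commonly distinguished in self-ordered search tasks: reopening a box already found empty during the current search (a within-search error) and opening a box that contained a token in an earlier search (a between-search error). The function name and the input format (one list of opened boxes per search, plus the box that held the token in each search) are assumptions made for illustration.

```python
def count_errors(search_clicks: list[list[int]], token_boxes: list[int]) -> dict[str, int]:
    """Count errors in one hypothetical self-ordered search trial.

    search_clicks[i] lists the boxes opened, in order, during search i;
    token_boxes[i] is the box that hid the token during search i.
    """
    within = between = 0
    past_tokens: set[int] = set()  # boxes that held a token in earlier searches
    for clicks, token in zip(search_clicks, token_boxes):
        opened: set[int] = set()  # boxes opened so far in this search
        for box in clicks:
            if box in past_tokens:
                between += 1  # this box already contained a token earlier
            elif box in opened:
                within += 1   # this box was already shown empty in this search
            opened.add(box)
            if box == token:
                break         # token found: the search ends
        past_tokens.add(token)
    return {"within_search": within, "between_search": between}


# Three boxes; the token is hidden in box 1, then box 0, then box 2.
print(count_errors([[0, 1], [1, 2, 2, 0], [2]], [1, 0, 2]))
# {'within_search': 1, 'between_search': 1}
```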

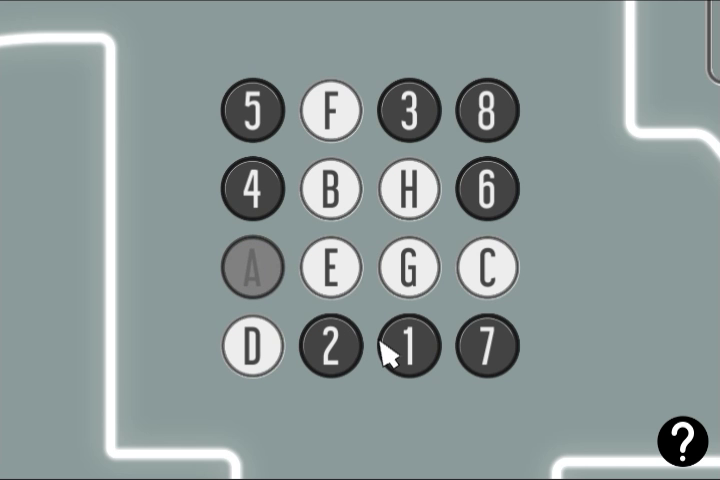

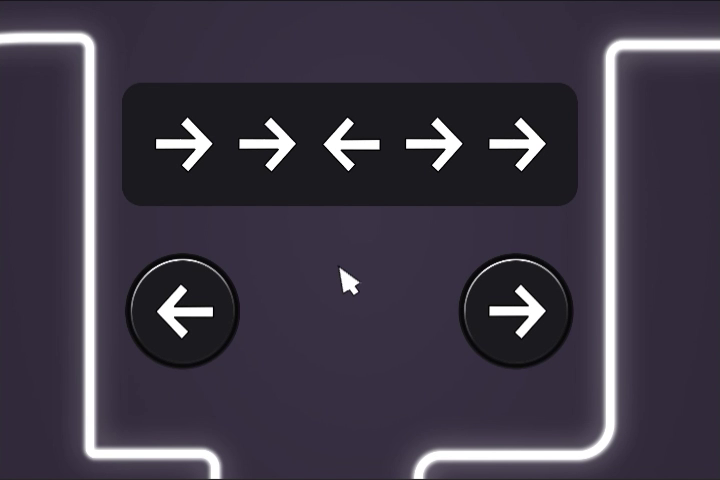

Ordered-Clicks

This engine supports tests that require people to click on buttons in a particular order. This example shows a variant of the Trail Making Test, which measures an aspect of executive function. In this test, participants are asked to click on letters and digits in a specified order as quickly as possible.

This engine supports several other tests, including a visual memorisation task (photographic memory) and the forward and backward spatial span tests.
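The ordering logic of a Trail Making variant can be sketched as follows, assuming the alternating number-letter order of the classic Part B (1, A, 2, B, ...) and assuming that a wrong click is counted as an error without advancing the trail. Both function names and the error rule are hypothetical; the Behaverse engine may handle errors differently.

```python
import string


def alternating_order(n_pairs: int) -> list[str]:
    """Return the alternating order 1, A, 2, B, ... (n_pairs <= 26)."""
    if not 1 <= n_pairs <= 26:
        raise ValueError("n_pairs must be between 1 and 26")
    numbers = [str(i) for i in range(1, n_pairs + 1)]
    letters = list(string.ascii_uppercase[:n_pairs])
    # Interleave numbers and letters: ("1", "A"), ("2", "B"), ...
    return [label for pair in zip(numbers, letters) for label in pair]


def score_clicks(expected: list[str], clicks: list[str]) -> tuple[int, int]:
    """Return (targets reached in order, errors); a wrong click does not advance."""
    position = errors = 0
    for label in clicks:
        if position < len(expected) and label == expected[position]:
            position += 1
        else:
            errors += 1
    return position, errors


order = alternating_order(3)
print(order)  # ['1', 'A', '2', 'B', '3', 'C']
print(score_clicks(order, ["1", "2", "A", "2", "B", "3", "C"]))  # (6, 1)
```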

Which One

In this test, an image or a group of images is shown and participants are asked to classify it into one of two categories. This example shows a variant of the Eriksen flanker test, which measures response inhibition. In this test, a central arrow is presented on the screen, surrounded by four other arrows that point either in the same or in the opposite direction. Participants are asked to report the direction of the central arrow and to ignore the surrounding arrows.

This engine supports several other tests, including the Simon test and the Bivalent Shape test.
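A flanker trial of the kind described above can be sketched with text arrows, assuming the four flankers are laid out in a row (two on each side of the target) and that half of the trials are congruent; these parameters and the function names are illustrative. The sketch also computes the congruency effect, that is, the mean response time on incongruent trials minus the mean on congruent trials, which is a standard summary measure for this task.

```python
import random
from statistics import mean


def make_flanker_trial(rng: random.Random, p_congruent: float = 0.5) -> dict:
    """Build one hypothetical flanker trial using '<' and '>' as arrows."""
    target = rng.choice("<>")
    congruent = rng.random() < p_congruent
    opposite = "<" if target == ">" else ">"
    flanker = target if congruent else opposite
    # Two flankers on each side of the central target, e.g. '>><>>'.
    return {"display": flanker * 2 + target + flanker * 2,
            "target": target,
            "congruent": congruent}


def congruency_effect(rts: list[float], congruent: list[bool]) -> float:
    """Mean RT on incongruent trials minus mean RT on congruent trials."""
    con = [rt for rt, c in zip(rts, congruent) if c]
    inc = [rt for rt, c in zip(rts, congruent) if not c]
    return mean(inc) - mean(con)


rng = random.Random(1)
print(make_flanker_trial(rng)["display"])
print(congruency_effect([400.0, 500.0, 420.0, 520.0], [True, False, True, False]))  # 100.0
```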

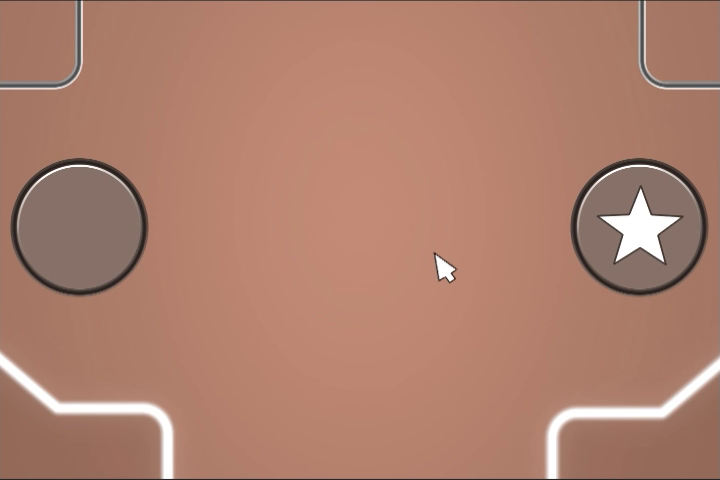

Spatial Attention Cueing

This engine supports tasks that use different cues to direct people’s attention to particular locations on the screen. This example shows a variant of the anti-saccade test, which involves attentional control. In this task, participants have to click on the button located on the opposite side from a salient flash that automatically attracts attention.

This engine supports a variety of attentional cueing paradigms (exogenous and/or endogenous cueing) as well as a task-switching test.

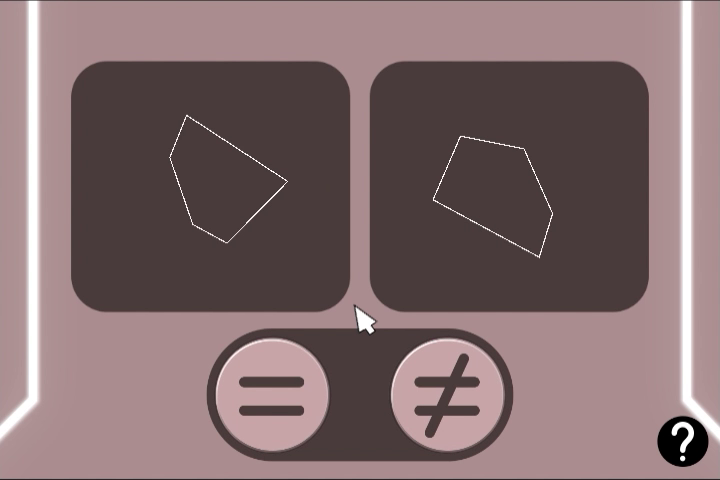

Polygon Comparison

This engine supports tasks that require participants to compare two visual shapes. This example shows a variant of the mental rotation test: participants are shown two polygons, one of which is randomly rotated and possibly distorted. Participants need to mentally rotate the polygons to determine whether or not they are the same.

This engine supports multiple tests focusing on perceptual abilities (e.g., comparing shapes in the presence of distractors).

Target Hit

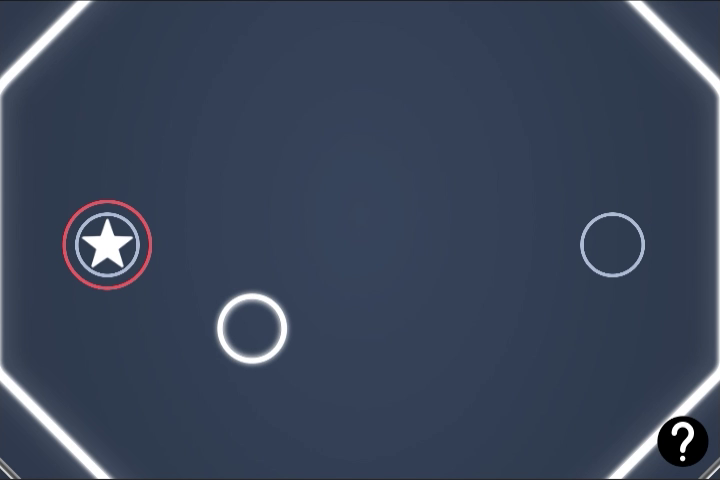

This engine supports tasks in which participants must rapidly move a disk to one of multiple locations on the screen. This example shows a variant of the stop-signal test: when a red disk appears around the location they are aiming for, participants should stop their ongoing movement.

This engine supports multiple variants of the stop-signal task as well as other tasks (e.g., simple and choice reaching tasks).

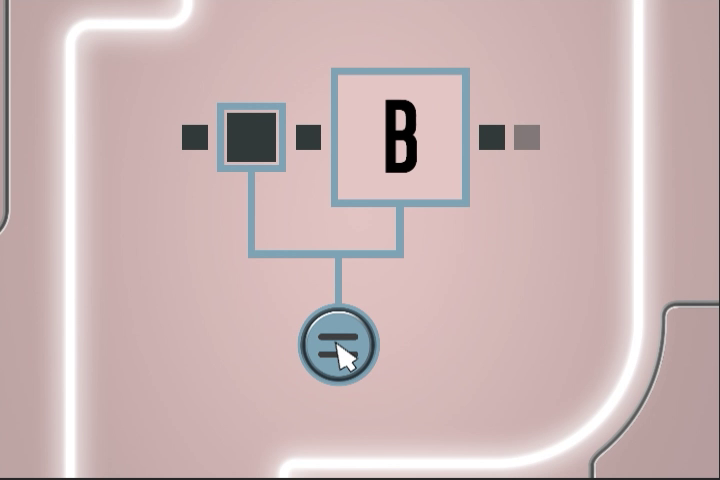

Belval Card Sorting

This engine supports tasks in which a multi-feature stimulus (at the top of the screen) must be classified according to one of its features by clicking on the corresponding button. This example shows a variant of the task-switching test, which measures aspects of cognitive control. In this test, participants are asked to classify stimuli based on either their number or their shape, depending on the text displayed at the center of the screen.

This engine supports multiple variants of the task-switching test as well as variants of the Wisconsin Card Sorting Test.
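The classification rule behind this example can be sketched as follows, assuming two hypothetical cue words (`NUMBER` and `SHAPE`) and a card described by how many shapes it shows and which shape is drawn. Trials are also labeled as repeats or switches relative to the previous cue, since switch costs are usually estimated by comparing performance on switch trials with performance on repeat trials.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Card:
    number: int  # how many shapes the card shows
    shape: str   # which shape is drawn, e.g. "star"


def correct_response(card: Card, cue: str) -> Union[int, str]:
    """Return the feature value that the current (hypothetical) cue asks for."""
    if cue == "NUMBER":
        return card.number
    if cue == "SHAPE":
        return card.shape
    raise ValueError(f"unknown cue: {cue!r}")


def label_trials(cues: list[str]) -> list[str]:
    """Label each trial 'first', 'repeat' or 'switch' relative to the previous cue."""
    return ["first" if i == 0 else ("repeat" if cue == cues[i - 1] else "switch")
            for i, cue in enumerate(cues)]


card = Card(number=3, shape="star")
print(correct_response(card, "NUMBER"), correct_response(card, "SHAPE"))  # 3 star
print(label_trials(["SHAPE", "SHAPE", "NUMBER", "SHAPE"]))
# ['first', 'repeat', 'switch', 'switch']
```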

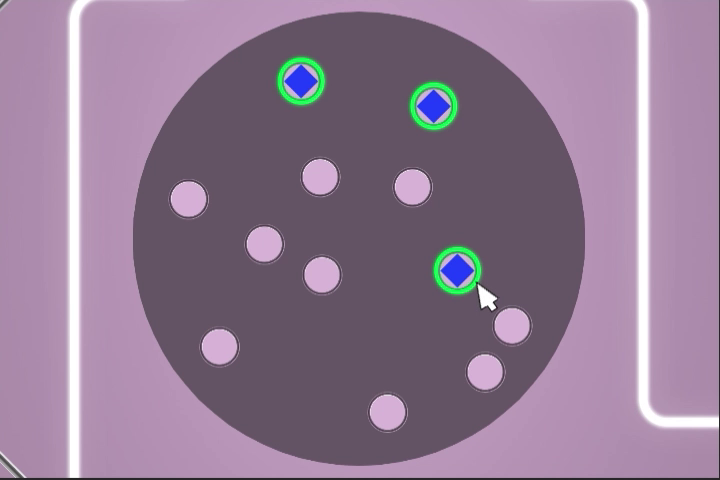

Multiple Object Tracking

Performing the multiple object tracking (MOT) test requires sustained attentional control. In this task, a set of dots moves randomly across the screen; some of the dots have been highlighted as targets, and participants are asked to keep track of the targets’ positions using their attention.

This engine supports multiple variants of the MOT (e.g., reporting all target locations on each trial by clicking on them) as well as a short-term visual memory task.

Distributed Attention

This is a variant of the Useful Field of View test, which requires participants to identify a symbol presented at the center of the screen and to locate a symbol in the periphery while ignoring visual distractors. This test is thought to rely on divided and selective attention.

This engine supports multiple variants of this test, including different sets of symbols.

Stimulus-Response Mapping

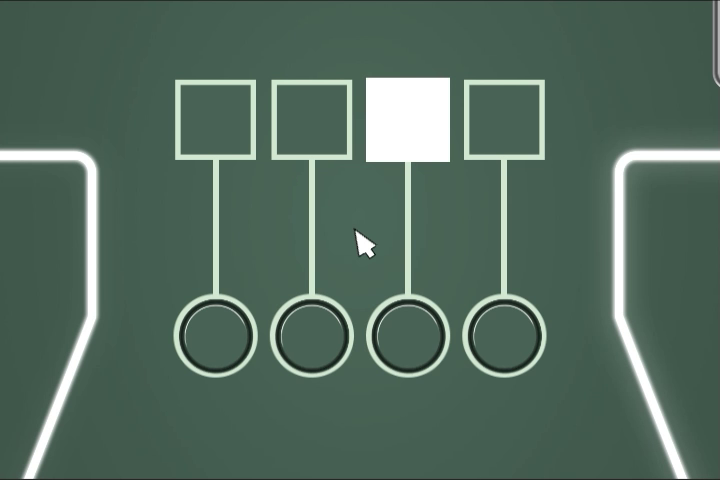

This test is similar to the Serial Reaction Time Task (SRTT), which is used to measure implicit motor learning. In this test, light boxes are linked to response buttons. Each time a box lights up, participants are asked to press the button connected to it as quickly as possible.

This engine supports a wide range of tests, including simple and multiple-choice reaction time tests and a variant of the Test of Variables of Attention (TOVA), which is commonly used to assess impulsivity and inattention.

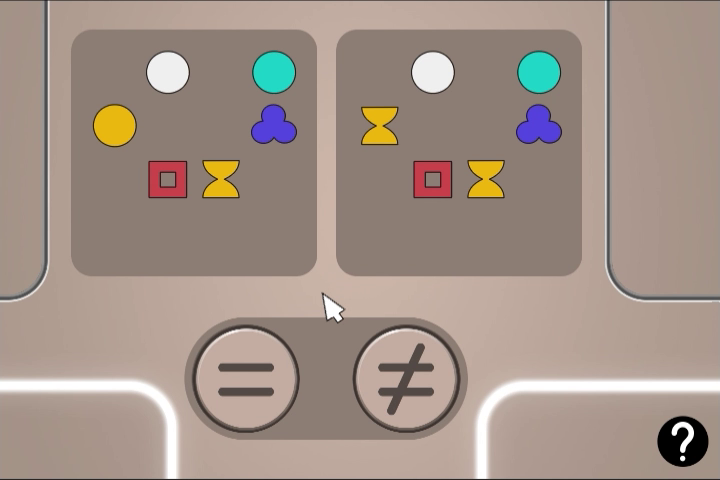

Symbol Matrix Comparison

In this visual search test, participants are shown two sets of symbols that are either identical or differ by a single symbol (which may differ in color, shape or location between the two images).

This engine supports several variants of this test, including a mental rotation version with and without indication of the rotation angle.

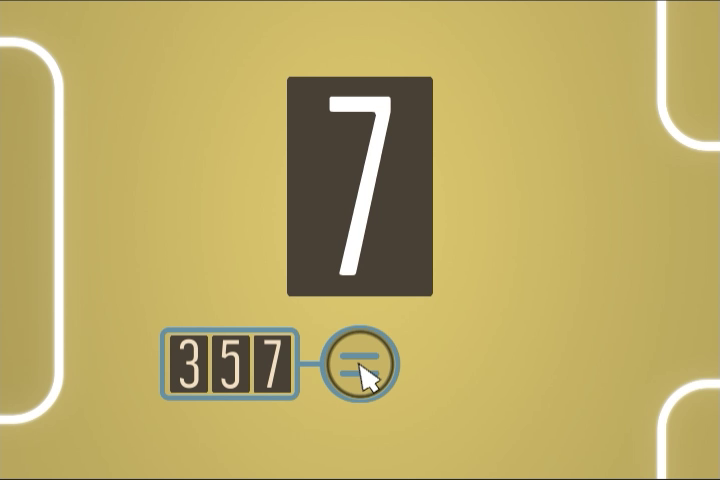

Sequence Match

In this test, a sequence of symbols is presented one at a time, and participants are asked to respond each time a particular target sequence occurs (e.g., 3-5-7).

This engine supports multiple tests, including a multistream version of the Rapid Visual Processing Test, the AX-CPT (with and without distractors) and the Sustained Attention to Response Task.
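Detecting occurrences of a target sequence in the stream can be sketched with a sliding window, assuming the stream and target are lists of symbols and that overlapping occurrences all count. The function name and these conventions are assumptions; a full scorer would additionally compare participants’ responses against the returned positions.

```python
def completion_indices(stream: list[int], target: list[int]) -> list[int]:
    """Indices in `stream` where an occurrence of `target` is completed.

    Uses a sliding window of len(target); overlapping occurrences are all reported.
    """
    if not target:
        raise ValueError("target sequence must not be empty")
    k = len(target)
    return [i for i in range(k - 1, len(stream)) if stream[i - k + 1 : i + 1] == target]


print(completion_indices([3, 5, 7, 5, 7, 3, 5, 7], [3, 5, 7]))  # [2, 7]
```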

N-back

Performance on this N-back task is thought to rely on working memory and sustained attention. Participants view a stream of stimuli (in this example, letters) and must indicate, for each one, whether it is the same as the stimulus shown N steps earlier (in this example, N=2).

This engine supports multiple variants of this test, including a variety of stimulus sets (e.g., digits, symbols, spatial locations) as well as multiple simultaneous N-back streams.
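The target rule of the N-back task can be sketched in a few lines: a stimulus is a target when it matches the stimulus N positions earlier, and each yes/no response can then be classified as a hit, miss, false alarm or correct rejection. The function names and the response format (one boolean per stimulus) are assumptions made for illustration.

```python
def nback_targets(stream: list[str], n: int) -> list[bool]:
    """True where a stimulus matches the one shown n positions earlier.

    The first n stimuli have no earlier counterpart, so they are never targets.
    """
    if n < 1:
        raise ValueError("n must be at least 1")
    return [i >= n and stream[i] == stream[i - n] for i in range(len(stream))]


def score_nback(stream: list[str], n: int, responses: list[bool]) -> dict[str, int]:
    """Classify one 'same' / 'different' response per stimulus (True means 'same')."""
    targets = nback_targets(stream, n)
    pairs = list(zip(targets, responses))
    return {
        "hits": sum(t and r for t, r in pairs),
        "misses": sum(t and not r for t, r in pairs),
        "false_alarms": sum(not t and r for t, r in pairs),
        "correct_rejections": sum(not t and not r for t, r in pairs),
    }


letters = list("ABACBCB")
print(nback_targets(letters, 2))
# [False, False, True, False, False, True, True]
print(score_nback(letters, 2, [False, False, True, False, True, False, True]))
# {'hits': 2, 'misses': 1, 'false_alarms': 1, 'correct_rejections': 3}
```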